📓4.1: Ethics of Data Collection

Ethical Issues with Data Collection

Data Privacy

📱 Your phone keeps a lot of information about you, including where you have been, what you buy, what games you play, etc. Here’s a video about the massive amounts of data our smart phones and computers collect about us:

As users, we often don’t realize how much personal data we are giving away. Whenever you use a computer or phone, your personal privacy is at risk.

Example: Location Tracking

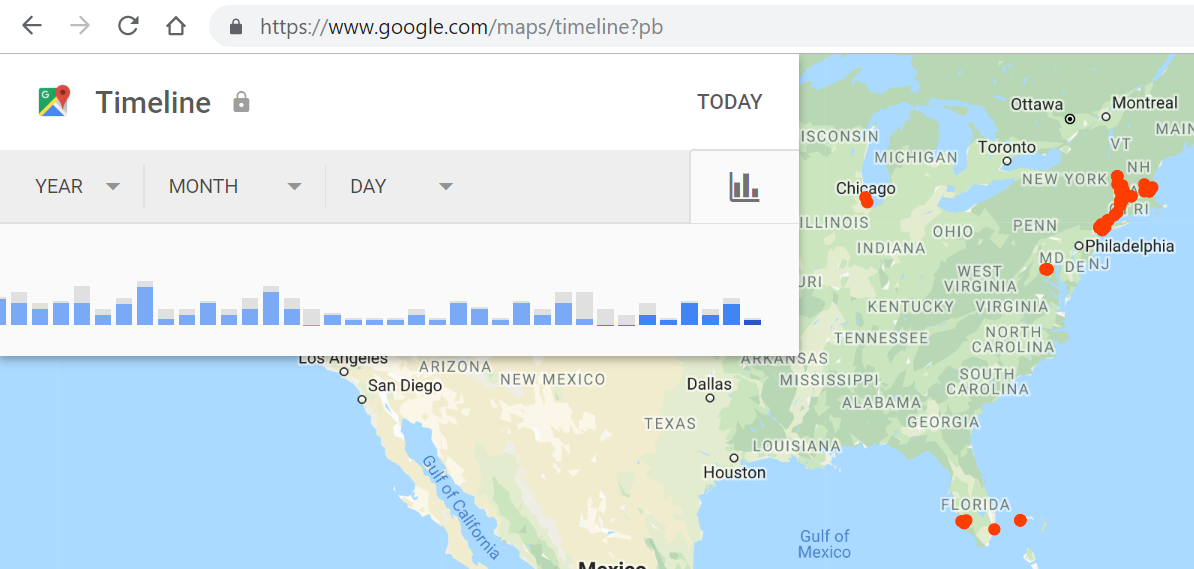

🗺️ If you have ever used your phone to get directions, it is probably still tracking your location data. Follow the directions in this article to check whether location history is turned on for various applications. You can always turn off location tracking, but tracking is useful when you want directions, it’s free… and some apps won’t work at all without location permissions!

💬 DISCUSS: Do the benefits of apps that provide driving directions outweigh the lack of privacy for you? In what situations would it be beneficial or harmful for the app to track your location?

As computer programmers, we must be aware of the risks to data privacy when our code collects and stores personal data on computer systems. The laws are slowly catching up to our technology, and many countries and states are passing laws to protect data privacy. Programmers have a responsibility to safeguard the personal privacy of the user:

- Legally and ethically, we must ask users for permission to access and store their data.

- If a data breach occurs and the data is stolen, we must inform the affected users.
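One way to picture "ask the users for permission" in code is to check for consent before anything is stored. The sketch below is hypothetical: the function `save_location`, the `location_consent` flag, and the list used as storage are assumptions for illustration, not part of any real app or library. It treats a user who was never asked the same as a user who said no.

```python
def save_location(user, latitude, longitude, saved_points):
    """Store a location point only if the user has explicitly opted in."""
    # Hypothetical consent flag; default to "no" when the user was never asked.
    if not user.get("location_consent", False):
        return False  # no permission, so nothing is stored
    saved_points.append((user["id"], latitude, longitude))
    return True


saved = []
print(save_location({"id": 1}, 40.71, -74.01, saved))  # False: never asked
print(save_location({"id": 2, "location_consent": True}, 40.71, -74.01, saved))  # True
print(saved)  # [(2, 40.71, -74.01)]
```

Defaulting to "no consent" means a missing setting can never quietly turn into permission.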

Software (computer programs, mobile apps, etc.) can have beneficial and/or harmful impacts on personal security and privacy, depending on the types of information tracked and stored.

- Location-tracking data could be useful, for example, if you are lost and need to be found.

- But it could be unsafe if an unauthorized person gains access to your location.

💬 DISCUSS: Pick a popular app or web site and look into its data collecting practices. Explain the risks to privacy from collecting and storing personal data on computer systems.

📺 Here are some interesting video resources about data collection and data privacy:

- A short 1-minute video about the Facebook Cambridge Analytica incident and a longer 1-hour PBS special on Facebook’s data practices.

- What is Geo-fencing (2 mins)

- The European General Data Protection Regulation (GDPR) (3 mins)

Data & Bias

The fields of AI (Artificial Intelligence) and Machine Learning (ML) are growing rapidly and increasingly raise ethical questions about data collection, privacy, and resource use. The machine learning algorithms behind software like ChatGPT require massive amounts of data to learn from (and consume massive amounts of energy). But where does this data come from?

- Data Collection: Often the data is collected from the internet, and the internet is full of biases. For example, if you search for professions like “programmer”, “doctor”, “CEO” in Google Images, you will probably see mostly images of white men. This reflects an existing bias in our world that AIs may learn. An AI could then generate text or images that are biased against historically underrepresented groups.

Algorithmic bias describes systemic and repeated errors in a program that create unfair outcomes for a specific group of users.

Example: Automated Hiring Algorithms

For example, in 2014 a prominent tech company started building an automated hiring tool: a resume-filtering AI system trained on its current employees’ resumes. As described in this article, the company ended up with an AI system that was biased against women. This is a problem because the AI learns from biased data and then produces biased outcomes that can affect people’s lives.

🎮 Try this game about algorithmic bias:

From the creators: “‘Survival of the Best Fit’ is an educational game about hiring bias in AI. We aim to explain how the misuse of AI can make machines inherit human biases and further inequality. Much of the public debate on AI has presented it as a threat imposed on us, rather than one that we have agency over. We want to change that by helping people understand the technology, and demand more accountability from those building increasingly pervasive software systems.”

Example: Facial Recognition Software

Watch the following video about gender and racial bias in face recognition algorithms by MIT computer scientist Joy Buolamwini.

- Bias in data can lead to unfair and unethical outcomes.

- For instance, facial recognition software has been shown to have higher error rates for people with darker skin tones.

- This is because the data used to train these algorithms often contains fewer examples of people with darker skin tones. As a result, the software is less accurate for these individuals, which can lead to discriminatory practices.
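A simple first check for this kind of problem is to count how much of a training set each group makes up. The sketch below assumes every example already carries a group label, which real face data sets often do not; the labels, the tiny data set, and the 0.3 cutoff are all hypothetical choices for illustration.

```python
from collections import Counter


def group_shares(labels):
    """Return the fraction of the data set that belongs to each group."""
    counts = Counter(labels)
    total = len(labels)
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, minimum_share):
    """List the groups whose share falls below a chosen minimum."""
    return [group for group, share in shares.items() if share < minimum_share]


# Hypothetical skin-tone labels attached to the faces in a tiny training set.
training_labels = ["lighter"] * 8 + ["darker"] * 2
shares = group_shares(training_labels)
print(shares)                          # {'lighter': 0.8, 'darker': 0.2}
print(underrepresented(shares, 0.3))   # ['darker']
```

A count like this only reveals one kind of imbalance; it does not by itself show whether a model trained on the data treats each group fairly.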

💬 DISCUSS: Explain the importance of recognizing data quality and potential issues such as data bias when using a data set in AI/ML applications.

📺 Here are some other interesting videos to watch about bias in algorithms:

- “AI, Ain’t I a Woman?”, a poem by Joy Buolamwini

- TED Talk video on Bias in Facial Recognition by Joy Buolamwini

- A report on police crime prediction software and bias

Programmers should understand how a data set was collected and identify any potential for bias before using the data to extrapolate new information or draw conclusions.

- Some data sets are incomplete or contain inaccurate data. Using such data in the development or use of a program can cause the program to work incorrectly or inefficiently.

- The contents of a data set might also relate to a narrow question or topic, so the data might not be appropriate for answering a different question or extrapolating information about a different topic.
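Before using a data set, a program can scan it for incomplete or clearly inaccurate records. This is a minimal sketch under stated assumptions: the survey records, the required field names, and the rule that an age must be between 0 and 120 are hypothetical examples of what "incomplete" and "inaccurate" might mean for one particular data set.

```python
def find_problem_rows(rows, required_fields):
    """Return (index, reason) pairs for rows with missing or implausible data."""
    problems = []
    for i, row in enumerate(rows):
        # Incomplete data: a required field is absent or empty.
        for field in required_fields:
            if row.get(field) in (None, ""):
                problems.append((i, f"missing {field}"))
        # Inaccurate data: a value outside a hypothetical plausible range.
        age = row.get("age")
        if isinstance(age, int) and not 0 <= age <= 120:
            problems.append((i, "age out of range"))
    return problems


survey = [
    {"name": "Ana", "age": 16},
    {"name": "", "age": 17},
    {"name": "Ben", "age": 250},
    {"name": "Cy"},
]
for index, reason in find_problem_rows(survey, ["name", "age"]):
    print(index, reason)
# 1 missing name
# 2 age out of range
# 3 missing age
```

A check like this can only catch problems someone thought to look for; it cannot tell whether the data suits a different question than the one it was collected for.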

It is important for programmers and data scientists to take steps to mitigate bias in data collection and usage. This can include picking diverse and representative datasets, regularly testing algorithms for biased results, and being transparent with users about the limitations of the software.

Here are some steps that can be taken to address bias in machine learning:

- Use diverse and representative data sets to train algorithms.

- Regularly test algorithms for bias and accuracy.

- Be transparent about the limitations and potential biases of the software.

- Involve diverse teams in the development and testing of algorithms.

- Implement ethical guidelines and standards for the use of AI and machine learning.
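"Regularly test algorithms for bias and accuracy" can start with comparing how often a program is wrong for each group of users, rather than looking only at overall accuracy. The sketch below is hypothetical: the group names "A" and "B", the predictions, and the correct answers are invented to illustrate the calculation.

```python
def error_rate_by_group(groups, predictions, actual):
    """Return the fraction of wrong predictions for each group."""
    totals, errors = {}, {}
    for group, predicted, label in zip(groups, predictions, actual):
        totals[group] = totals.get(group, 0) + 1
        if predicted != label:
            errors[group] = errors.get(group, 0) + 1
    return {group: errors.get(group, 0) / totals[group] for group in totals}


# Hypothetical test results: which group each person belongs to,
# what the program predicted, and what the correct answer was.
groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1,   0,   1,   1,   0,   0,   1,   0]
actual      = [1,   0,   1,   1,   1,   0,   0,   1]

rates = error_rate_by_group(groups, predictions, actual)
print(rates)  # {'A': 0.0, 'B': 0.75}
```

Overall, this program is right 5 times out of 8, which hides the fact that every error falls on group B. A large gap between groups is a sign of the systemic, repeated errors that define algorithmic bias.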

💻 Group Activity: Dataset Critique

🔍 Go to Google Dataset Search and search for a dataset for the topic of “face recognition” (or another topic you are interested in). Find an appropriate (“good”) dataset and an inappropriate (“bad”) dataset for your topic.

🗣️ Be prepared to share:

- Why you believe each dataset is appropriate or inappropriate, mentioning specific considerations. For example, a dataset might be “bad” if it is too small, incomplete, or biased.

- How the choice of dataset could affect the results of a program that uses the data.

Summary

- (AP 4.1.A.1) When using a computer, personal privacy is at risk. When developing new programs, programmers should attempt to safeguard the personal privacy of the user.

- Computer use and the creation of programs have an impact on personal security and data privacy. These impacts can be beneficial and/or harmful.

- (AP 4.1.B.1) Algorithmic bias describes systemic and repeated errors in a program that create unfair outcomes for a specific group of users.

- (AP 4.1.B.2) Programmers should be aware of the data set collection method and the potential for bias when using this method before using the data to extrapolate new information or drawing conclusions.

- (AP 4.1.B.3) Some data sets are incomplete or contain inaccurate data. Using such data in the development or use of a program can cause the program to work incorrectly or inefficiently.

- (AP 4.1.C.1) Contents of a data set might be related to a specific question or topic and might not be appropriate to give correct answers or extrapolate information for a different question or topic.

Acknowledgement

Content on this page is adapted from Runestone Academy - Barb Ericson, Beryl Hoffman, Peter Seibel.